Genetic algorithms are a powerful approach to problem-solving: by mimicking natural evolution, they search for high-quality solutions to hard optimization problems across a wide range of applications. 🧬

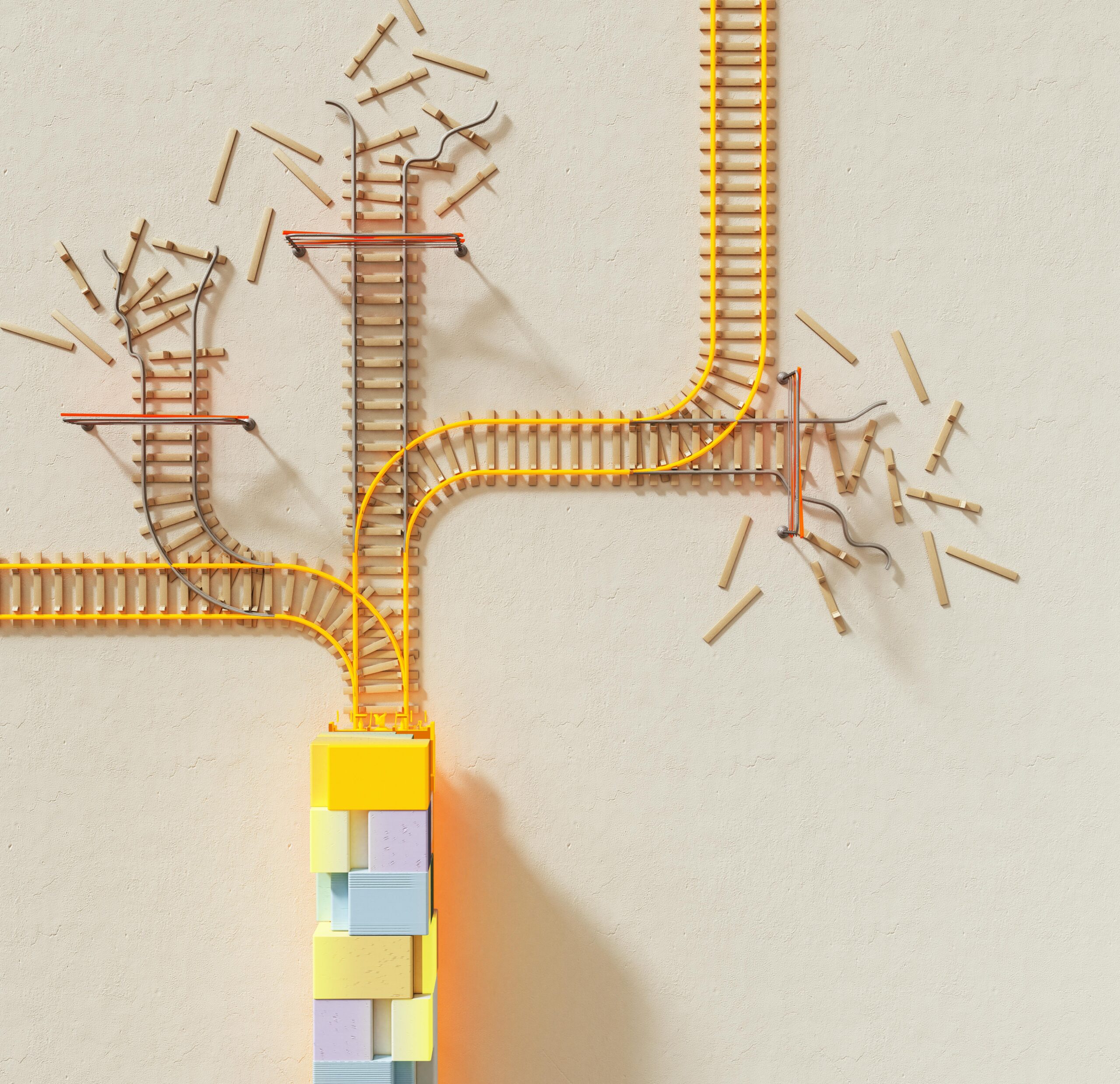

As artificial intelligence and biotechnology increasingly converge, genetic algorithms have become an established optimization tool. These computational methods, inspired by Charles Darwin’s theory of natural selection, have changed how practitioners approach complex optimization problems in fields ranging from drug discovery to urban planning. The wider their reach, the more consequential the decisions they inform, which makes their ethical deployment more critical than ever.

The urgency to harness genetic algorithms responsibly stems from their increasing influence on decisions that affect human lives, environmental sustainability, and societal structures. When implemented without proper ethical frameworks, these powerful tools can perpetuate biases, create unintended consequences, and exacerbate existing inequalities. Understanding how to unlock their potential while maintaining ethical integrity is essential for researchers, developers, and organizations alike.

The Fundamental Architecture of Genetic Algorithms 🔬

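Genetic algorithms operate through a process that mirrors biological evolution. They begin with a population of candidate solutions, score each one with a fitness function that encodes the problem’s objectives, and then use selection, crossover, and mutation to create new generations that tend to improve over time. This iterative process continues until a stopping criterion is met, such as a fixed number of generations, a target fitness value, or a plateau in improvement. Because the search is heuristic, the result is a good or satisfactory solution rather than a guaranteed optimum.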
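To make the loop concrete, here is a minimal sketch in Python. It assumes a bit-string encoding and the toy OneMax fitness function, which simply counts the 1s. Tournament selection, one-point crossover, bit-flip mutation, and elitism are common textbook operators, but they are not the only choices. Every function name and parameter value below is illustrative.

```python
import random

def one_max(bits):
    """Toy fitness function: the number of 1-bits (higher is better)."""
    return sum(bits)

def tournament(pop, fits, rng, k=3):
    """Selection: return the fittest of k randomly sampled individuals."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: fits[i])]

def crossover(a, b, rng):
    """One-point crossover: prefix from one parent, suffix from the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(bits, rate, rng):
    """Bit-flip mutation: flip each gene independently with probability `rate`."""
    return [1 - b if rng.random() < rate else b for b in bits]

def run_ga(fitness, n_bits=20, pop_size=30, generations=100,
           mutation_rate=0.05, target=None, seed=0):
    """Evolve bit strings until `target` fitness or the generation limit (>= 1)."""
    rng = random.Random(seed)  # a fixed seed makes the run reproducible
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for gen in range(1, generations + 1):
        fits = [fitness(ind) for ind in pop]
        best_idx = max(range(pop_size), key=lambda i: fits[i])
        best, best_fit = pop[best_idx], fits[best_idx]
        if target is not None and best_fit >= target:
            break  # stopping criterion: good enough
        children = [best]  # elitism: carry the current best over unchanged
        while len(children) < pop_size:
            p1 = tournament(pop, fits, rng)
            p2 = tournament(pop, fits, rng)
            children.append(mutate(crossover(p1, p2, rng), mutation_rate, rng))
        pop = children
    return best, best_fit, gen

if __name__ == "__main__":
    best, best_fit, gens = run_ga(one_max, target=20, seed=1)
    print(f"best fitness {best_fit} after {gens} generations")
```

Running the module prints the best fitness and the generation at which the run stopped. Most of the design choices, including the ethical ones, enter the algorithm when you swap `one_max` for a domain-specific scoring function.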

The beauty of genetic algorithms lies in their versatility. They need only a way to score candidate solutions, not gradients or a closed-form model of the problem, so they can be applied where conventional methods struggle. They also maintain a whole population of candidates. This lets them sample many regions of the search space at once instead of refining a single solution along one path, which makes them effective for complex, multi-dimensional problems. They excel in scheduling optimization, resource allocation, machine learning tasks such as hyperparameter tuning and feature selection, and design engineering.

What distinguishes modern genetic algorithms from their predecessors is their enhanced computational efficiency and adaptability. Today’s implementations incorporate parallel processing, advanced mutation strategies, and hybrid approaches that combine genetic algorithms with other optimization techniques. This evolution has expanded their applicability while simultaneously raising important questions about responsible implementation.
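Hybrid approaches of this kind are often called memetic algorithms. In a memetic algorithm, offspring produced by crossover and mutation are refined with a local search before they rejoin the population. The sketch below assumes a bit-string encoding and uses a simple first-improvement hill climber. The function names and the `max_passes` limit are hypothetical.

```python
import random

def hill_climb(bits, fitness, max_passes=10):
    """First-improvement local search: accept any single-bit flip that helps."""
    current = list(bits)
    current_fit = fitness(current)
    for _ in range(max_passes):
        improved = False
        for i in range(len(current)):
            candidate = current.copy()
            candidate[i] = 1 - candidate[i]
            cand_fit = fitness(candidate)
            if cand_fit > current_fit:
                current, current_fit = candidate, cand_fit
                improved = True
        if not improved:
            break  # local optimum with respect to single-bit flips
    return current, current_fit

def memetic_step(parent_a, parent_b, fitness, rng, mutation_rate=0.05):
    """One hybrid step: GA-style crossover and mutation, then local refinement."""
    point = rng.randrange(1, len(parent_a))
    child = parent_a[:point] + parent_b[point:]
    child = [1 - b if rng.random() < mutation_rate else b for b in child]
    return hill_climb(child, fitness)

if __name__ == "__main__":
    rng = random.Random(0)
    child, fit = memetic_step([0, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 0], sum, rng)
    print(child, fit)  # on this toy fitness, local search always reaches all 1s
```

`memetic_step` writes the refined genes back into the population, which is the Lamarckian variant. A Baldwinian variant would keep the original genes and use only the refined fitness. Either way, each child costs extra fitness evaluations. In exchange, convergence is often faster on problems with exploitable local structure.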

Ethical Foundations: Building Responsibility Into the Code 💡

Responsible genetic algorithms begin with intentional design choices that prioritize ethical considerations from the outset. This means incorporating fairness metrics, transparency mechanisms, and accountability measures directly into the algorithmic architecture rather than treating ethics as an afterthought.

The first principle of responsible genetic algorithm development involves comprehensive stakeholder analysis. Developers must identify who will be affected by the algorithm’s decisions and ensure diverse perspectives inform the fitness functions and constraints. This inclusive approach helps prevent the algorithm from optimizing for outcomes that benefit some groups while disadvantaging others.

Transparency represents another cornerstone of ethical genetic algorithm implementation. While the stochastic nature of these algorithms can make their decision-making processes seem opaque, responsible developers document selection criteria, mutation rates, and convergence patterns. This documentation enables external auditing and helps identify potential biases or unintended optimization pathways.
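One lightweight way to make that documentation routine is to have each run emit a machine-readable record of its settings and convergence history. This sketch assumes JSON is an acceptable audit format. The field names, the `fitness_version` label, and the helper functions are hypothetical. Recording the random seed matters because, given the same code and data, it lets an auditor reproduce a stochastic run exactly.

```python
import json
import statistics

def generation_stats(gen, fitnesses):
    """Summarize one generation so its convergence can be inspected later."""
    return {
        "generation": gen,
        "best": max(fitnesses),
        "mean": statistics.fmean(fitnesses),
        # A shrinking spread often signals that the population is converging.
        "stdev": statistics.pstdev(fitnesses),
    }

def audit_record(config, history):
    """Serialize run settings plus per-generation stats as reviewable JSON."""
    return json.dumps({"config": config, "history": history},
                      indent=2, sort_keys=True)

if __name__ == "__main__":
    config = {  # hypothetical settings; record whatever the run actually uses
        "seed": 42,
        "population_size": 30,
        "selection": "tournament, k=3",
        "crossover": "one-point",
        "mutation_rate": 0.05,
        "fitness_version": "v1",
    }
    history = [generation_stats(0, [3, 5, 8]), generation_stats(1, [6, 8, 8])]
    print(audit_record(config, history))
```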

Implementing Fairness Constraints in Selection Mechanisms

Traditional genetic algorithms optimize solely for performance metrics, but responsible implementations incorporate fairness constraints that prevent discriminatory outcomes. These constraints act as guardrails, ensuring that the algorithm’s evolutionary process doesn’t converge on solutions that violate ethical principles or regulatory requirements.

Fairness-aware genetic algorithms might include protected attributes in their evaluation functions, actively monitoring how different demographic groups are affected by proposed solutions. For example, in hiring optimization scenarios, the algorithm would track whether candidate selection patterns disproportionately exclude certain groups. When it detects disparate impact, it would steer its evolutionary trajectory away from those solutions, for instance by lowering their fitness.
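A common way to implement such a guardrail is a penalty term in the fitness function. The sketch below makes three assumptions: each chromosome is a 0/1 mask over a candidate pool, a predicted quality score exists for each candidate, and group labels are available for monitoring. Its threshold borrows the four-fifths rule of thumb from US employment-selection guidelines. Under that rule, a group's selection rate below 80% of the highest group's rate is treated as evidence of adverse impact. The penalty weight and all names are hypothetical.

```python
def selection_rates(selected, groups):
    """Share of candidates selected within each group (selected is a 0/1 mask)."""
    rates = {}
    for g in set(groups):
        members = [s for s, grp in zip(selected, groups) if grp == g]
        rates[g] = sum(members) / len(members)
    return rates

def disparate_impact_ratio(selected, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = list(selection_rates(selected, groups).values())
    highest = max(rates)
    return min(rates) / highest if highest > 0 else 1.0

def fair_fitness(selected, scores, groups, threshold=0.8, penalty=10.0):
    """Total predicted score of the shortlist, minus a penalty proportional
    to how far the disparate-impact ratio falls below `threshold`."""
    quality = sum(sc for sc, chosen in zip(scores, selected) if chosen)
    shortfall = max(0.0, threshold - disparate_impact_ratio(selected, groups))
    return quality - penalty * shortfall
```

The penalty grows with the shortfall, so selection gradually steers the population away from discriminatory shortlists instead of discarding them outright. Larger `penalty` values approach a hard constraint. Choosing the balance is a policy decision, not a purely technical one.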

Real-World Applications Demanding Ethical Vigilance ⚖️

Healthcare represents one of the most sensitive domains for genetic algorithm deployment. These algorithms optimize treatment protocols, drug dosages, and resource allocation in hospitals. A responsible approach requires balancing efficiency gains with patient safety, ensuring that optimization doesn’t inadvertently prioritize cost reduction over care quality.

In pharmaceutical research, genetic algorithms accelerate drug discovery by exploring vast chemical compound spaces. Ethical implementation here means establishing clear boundaries around testing protocols, ensuring that optimization for efficacy doesn’t compromise safety standards, and maintaining transparency about algorithmic recommendations to human researchers who make final decisions.
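One established way to keep safety from being traded away for efficacy is to treat it as a constraint rather than as another term to weigh. The sketch below compares candidates with feasibility rules of the kind commonly attributed to Deb, for example inside tournament selection. The `Candidate` fields, the toxicity score, and `SAFETY_LIMIT` are hypothetical stand-ins for whatever validated safety criteria a real program would use.

```python
from dataclasses import dataclass

SAFETY_LIMIT = 0.3  # hypothetical maximum acceptable predicted toxicity

@dataclass(frozen=True)
class Candidate:
    name: str
    efficacy: float  # predicted benefit, higher is better
    toxicity: float  # predicted risk score, must stay <= SAFETY_LIMIT

def violation(c, limit=SAFETY_LIMIT):
    """How far a candidate exceeds the safety limit (0.0 if within it)."""
    return max(0.0, c.toxicity - limit)

def better(a, b, limit=SAFETY_LIMIT):
    """Feasibility rules for a pairwise comparison:
    1. a safe candidate always beats an unsafe one;
    2. between two safe candidates, higher efficacy wins;
    3. between two unsafe candidates, the smaller violation wins."""
    va, vb = violation(a, limit), violation(b, limit)
    if va == 0 and vb == 0:
        return a if a.efficacy >= b.efficacy else b
    if va == 0 or vb == 0:
        return a if va == 0 else b
    return a if va <= vb else b
```

Under these rules, no amount of predicted efficacy can make an unsafe candidate win against a safe one. Reviewers therefore only ever see high-efficacy suggestions that already respect the safety boundary.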

Financial services increasingly employ genetic algorithms for portfolio optimization, risk assessment, and fraud detection. Responsible deployment in this sector requires that algorithms not perpetuate historical biases encoded in the data their fitness functions rely on, such as discriminatory lending patterns. Financial institutions must implement continuous monitoring systems that detect when algorithms generate outcomes with disparate impact across demographic groups.

Environmental Sustainability and Urban Planning

Genetic algorithms optimize energy grid management, transportation networks, and urban development plans. These applications carry profound implications for community wellbeing and environmental sustainability. Responsible implementation demands that algorithms balance efficiency with equity, ensuring that optimized solutions don’t disproportionately burden vulnerable communities with pollution or inadequate services.
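Balancing efficiency with equity is naturally a multi-objective problem. A multi-objective genetic algorithm does not fold both goals into one weighted score; NSGA-II is a well-known example. Instead, it keeps the set of non-dominated trade-offs and leaves the final choice to human decision-makers. The sketch below shows only the dominance test at the core of such methods. The two objectives and the sample plans are hypothetical: total cost, and the worst pollution burden borne by any neighborhood.

```python
def dominates(a, b):
    """True if plan a is no worse than plan b on every objective and strictly
    better on at least one. All objectives here are minimized."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def pareto_front(plans):
    """Keep the plans that no other plan dominates (a plan never dominates itself)."""
    return {name: objs for name, objs in plans.items()
            if not any(dominates(other, objs) for other in plans.values())}

if __name__ == "__main__":
    # Hypothetical plans scored as (total cost, worst neighborhood pollution burden).
    plans = {"A": (100, 9), "B": (120, 4), "C": (130, 5), "D": (150, 2)}
    print(sorted(pareto_front(plans)))
```

Plan C is dropped because plan B is cheaper and imposes a lower worst-case burden. Plans A, B, and D represent genuinely different trade-offs, and stakeholders, not the algorithm, should weigh them.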

Smart city initiatives leverage genetic algorithms to optimize traffic flow, waste management, and public service delivery. The ethical imperative here involves ensuring that algorithmic optimizations serve all residents equitably, not just those in affluent neighborhoods with better data infrastructure. Developers must actively combat the tendency of algorithms to optimize for areas with richer data availability.

The Data Foundation: Garbage In, Responsibility Out 📊

The quality and representativeness of the data behind a genetic algorithm’s fitness function fundamentally determine whether it produces responsible outcomes. Biased, incomplete, or unrepresentative datasets tend to steer the search toward suboptimal or discriminatory solutions, regardless of how well-intentioned the implementation.

Responsible data practices for genetic algorithms include rigorous auditing of data sources, active efforts to identify and mitigate historical biases, and continuous validation that datasets represent the populations affected by algorithmic decisions. This might involve oversampling underrepresented groups, applying statistical techniques to reweight observations, or incorporating synthetic data to fill gaps.
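Reweighting can be as simple as giving each record a weight inversely proportional to the size of its group. A fitness function that averages over records then cannot let the largest group dominate. This is a minimal sketch, and the group labels and satisfaction scores are hypothetical. Equal group weight is one policy choice among several; weighting to match a known population distribution is another.

```python
from collections import Counter

def inverse_frequency_weights(groups):
    """One weight per record so that every group carries the same total weight."""
    counts = Counter(groups)
    per_group_total = len(groups) / len(counts)
    return [per_group_total / counts[g] for g in groups]

def weighted_mean(values, weights):
    """Mean in which each record counts in proportion to its weight."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

if __name__ == "__main__":
    # Hypothetical service-satisfaction scores: three urban records, one rural.
    groups = ["urban", "urban", "urban", "rural"]
    satisfaction = [0.9, 0.9, 0.9, 0.3]
    w = inverse_frequency_weights(groups)
    print(sum(satisfaction) / len(satisfaction))  # unweighted: about 0.75
    print(weighted_mean(satisfaction, w))         # reweighted: about 0.6
```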

Data provenance tracking enables developers to understand how different data sources influence algorithmic behavior. When genetic algorithms produce unexpected or concerning results, comprehensive provenance documentation allows teams to trace problems back to specific data inputs, facilitating targeted interventions rather than wholesale algorithmic redesigns.
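A provenance record need not be elaborate. The sketch below assumes inputs can be read as bytes. It fingerprints each data source with SHA-256 and compares the manifests from two runs, so a team investigating a concerning result can see which inputs actually changed. The field names and helpers are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone

def provenance_entry(name, content, source, notes=""):
    """Describe one data input: where it came from and a fingerprint of its bytes."""
    return {
        "name": name,
        "source": source,
        "sha256": hashlib.sha256(content).hexdigest(),
        "size_bytes": len(content),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
        "notes": notes,
    }

def changed_inputs(old_manifest, new_manifest):
    """Names of inputs that are new or whose fingerprint differs between runs."""
    old = {e["name"]: e["sha256"] for e in old_manifest}
    return sorted(e["name"] for e in new_manifest if old.get(e["name"]) != e["sha256"])

if __name__ == "__main__":
    manifest = [provenance_entry("applicants.csv", b"id,score\n1,0.7\n",
                                 source="hypothetical HR export")]
    print(json.dumps(manifest, indent=2))
```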

Governance Frameworks: From Principles to Practice 🏛️

Translating ethical principles into operational practices requires robust governance frameworks that guide genetic algorithm development, deployment, and monitoring. These frameworks establish clear roles, responsibilities, and decision-making processes throughout the algorithmic lifecycle.

Effective governance begins with cross-functional ethics committees that review genetic algorithm projects before deployment. These committees should include technical experts, ethicists, legal advisors, and representatives from affected communities. Their mandate extends beyond approving or rejecting projects to actively shaping algorithmic design to align with organizational values and societal expectations.

- Establish clear ethical guidelines specific to genetic algorithm applications in your domain

- Create review processes that evaluate algorithms before deployment and at regular intervals

- Implement monitoring systems that detect drift from intended outcomes or emerging ethical concerns

- Develop transparent communication protocols for explaining algorithmic decisions to stakeholders

- Build feedback mechanisms that allow affected parties to report concerns and trigger reviews

- Maintain documentation standards that enable independent auditing and accountability

Continuous Monitoring and Adaptive Governance

Genetic algorithm deployments change over time: they are rerun as new data arrives, and dynamic variants keep adapting to changing environments. Static governance frameworks prove inadequate for such systems. Responsible organizations implement continuous monitoring that tracks algorithmic performance across multiple dimensions, including accuracy, fairness, efficiency, and societal impact.

Adaptive governance means establishing triggers that automatically initiate reviews when algorithms exhibit concerning patterns. These might include sudden changes in decision distributions, declining performance for specific subgroups, or user feedback indicating problems. When triggers activate, governance protocols should pause algorithmic operations pending investigation and potential redesign.
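Such triggers can start as simple threshold checks against a baseline window. The sketch below assumes per-group approval rates and accuracy are already computed for a baseline period and for the current period. The metric names and the tolerances are hypothetical placeholders that a governance committee would need to set. The defaults here are a 10-point shift in approval rate and a 5-point drop in accuracy.

```python
def review_triggers(baseline, current, max_rate_shift=0.10, max_subgroup_drop=0.05):
    """Compare a monitoring window with a baseline and list reasons to pause
    for review. Both dicts map group -> {"approval_rate": ..., "accuracy": ...}."""
    reasons = []
    for group, base in baseline.items():
        now = current.get(group)
        if now is None:
            reasons.append(f"{group}: no recent data")
            continue
        shift = abs(now["approval_rate"] - base["approval_rate"])
        if shift > max_rate_shift:  # sudden change in decision distribution
            reasons.append(f"{group}: approval rate moved by {shift:.2f}")
        drop = base["accuracy"] - now["accuracy"]
        if drop > max_subgroup_drop:  # declining performance for a subgroup
            reasons.append(f"{group}: accuracy fell by {drop:.2f}")
    return reasons

if __name__ == "__main__":
    baseline = {"a": {"approval_rate": 0.50, "accuracy": 0.90},
                "b": {"approval_rate": 0.45, "accuracy": 0.88}}
    current = {"a": {"approval_rate": 0.52, "accuracy": 0.89},
               "b": {"approval_rate": 0.30, "accuracy": 0.80}}
    for reason in review_triggers(baseline, current):
        print(reason)
```

A non-empty result would be the signal to pause the system and open a review. A production monitor would also want statistical tests that account for sample size, which this sketch omits.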

Balancing Innovation with Precaution: The Responsible Path Forward 🚀

The tension between innovation and precaution represents perhaps the greatest challenge in responsible genetic algorithm development. Organizations face pressure to deploy these powerful tools quickly to gain competitive advantages, yet rushing implementation without adequate ethical safeguards creates significant risks.

A responsible innovation framework embraces staged deployment approaches. Rather than immediately applying genetic algorithms to high-stakes decisions, organizations can begin with lower-risk applications, carefully monitoring outcomes and refining ethical safeguards before expanding to more sensitive domains. This graduated approach builds institutional knowledge about responsible implementation while minimizing potential harms.

Sandbox environments provide valuable spaces for experimenting with genetic algorithms under controlled conditions. These testing grounds allow developers to explore algorithmic behavior with diverse datasets, stress-test fairness constraints, and identify potential failure modes before real-world deployment. Organizations should resist pressure to bypass sandbox phases, recognizing that thorough testing represents an investment in long-term responsible innovation.

Cultivating Ethical Expertise Across Technical Teams 🎓

Technical proficiency alone proves insufficient for responsible genetic algorithm development. Organizations must cultivate ethical literacy across their technical teams, ensuring that developers, data scientists, and engineers understand the societal implications of their work and possess frameworks for navigating ethical dilemmas.

Ethics training for technical teams should move beyond abstract principles to practical case studies specific to genetic algorithm applications. Developers benefit from examining real-world scenarios where algorithms produced unintended consequences, analyzing what went wrong, and exploring alternative design choices that might have prevented problems.

Cross-disciplinary collaboration enriches genetic algorithm development by bringing diverse perspectives to bear on design decisions. Pairing data scientists with ethicists, social scientists, or domain experts from affected communities generates insights that purely technical teams might overlook. These collaborations help identify potential fairness issues, anticipate unintended consequences, and design more robust evaluation metrics.

Measuring Success Beyond Traditional Metrics 📈

Conventional genetic algorithm evaluation focuses on convergence speed, solution optimality, and computational efficiency. Responsible implementations expand success metrics to include fairness indicators, transparency scores, and stakeholder satisfaction measures that capture broader societal impacts.

Multidimensional evaluation frameworks acknowledge that optimal solutions from a purely technical perspective may prove suboptimal when ethical considerations are incorporated. A hiring algorithm might identify candidates predicted to perform marginally better, but if those predictions rely on biased proxies, the “optimal” solution perpetuates discrimination. Responsible evaluation recognizes such outcomes as failures despite their technical performance.

| Metric Category | Traditional Focus | Responsible Expansion |
|---|---|---|
| Performance | Accuracy, precision, recall | Accuracy across demographic subgroups, worst-case performance |
| Efficiency | Computational resources, convergence speed | Environmental impact, accessibility of benefits |
| Robustness | Performance under varying conditions | Resilience to adversarial manipulation, fairness under distribution shift |
| Transparency | Documentation of methodology | Explainability of decisions, auditability of processes |
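The expanded Performance row can be made concrete by reporting per-group accuracy alongside the overall figure, with the worst-performing group called out explicitly. This is a minimal sketch that assumes binary labels and a group label per record. The function names and the sample data are hypothetical.

```python
from collections import defaultdict

def subgroup_accuracy(y_true, y_pred, groups):
    """Accuracy computed separately for each group."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}

def evaluation_report(y_true, y_pred, groups):
    """Overall accuracy plus per-group and worst-case (minimum) accuracy."""
    per_group = subgroup_accuracy(y_true, y_pred, groups)
    overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
    return {"overall": overall, "per_group": per_group,
            "worst_case": min(per_group.values())}

if __name__ == "__main__":
    report = evaluation_report([1, 0, 1, 1, 0, 1], [1, 0, 1, 0, 1, 1],
                               ["a", "a", "a", "b", "b", "b"])
    print(report)
```

In the sample data, an overall accuracy of about 0.67 hides the fact that group `b` is served correctly only one time in three. A worst-case metric surfaces exactly this kind of outcome.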

The Collaborative Future: Building Responsible AI Ecosystems 🌍

No single organization can tackle the challenges of responsible genetic algorithm development in isolation. The complexity of ethical considerations, the rapid pace of technological advancement, and the far-reaching societal implications demand collaborative approaches that bring together stakeholders across sectors and disciplines.

Industry consortiums focused on responsible AI provide valuable forums for sharing best practices, developing common standards, and coordinating responses to emerging ethical challenges. These collaborative spaces allow organizations to learn from each other’s experiences, collectively advancing the field’s ethical maturity beyond what any single entity could achieve independently.

Academic partnerships enrich responsible genetic algorithm development by contributing rigorous research on fairness metrics, bias mitigation techniques, and ethical frameworks. Universities and research institutions can explore questions too fundamental or long-term for commercial entities to prioritize, generating insights that benefit the entire field.

Regulatory engagement represents another crucial dimension of collaborative responsibility. Rather than viewing regulation as an external constraint, forward-thinking organizations actively participate in policy development, sharing technical expertise that helps regulators craft informed, effective rules. This proactive engagement produces better regulations while positioning organizations as responsible industry leaders.

Empowering Individuals Through Algorithmic Literacy 💪

Responsible genetic algorithm deployment extends beyond developer obligations to include empowering individuals affected by these systems. When people understand how algorithms shape decisions that impact their lives, they can better advocate for their interests, identify problems, and hold organizations accountable.

Algorithmic literacy initiatives educate the public about genetic algorithms and their applications, demystifying these technologies without requiring technical expertise. Such programs help people recognize when they encounter algorithmic decision-making, understand their rights, and know how to seek recourse when algorithms produce harmful outcomes.

Transparency in algorithmic deployment supports individual empowerment by informing people when genetic algorithms influence decisions affecting them. Organizations should clearly communicate algorithmic involvement in hiring, lending, healthcare, and other sensitive domains, providing accessible explanations of how algorithms operate and what factors influence their recommendations.

Turning Principles Into Lasting Impact 🌟

The journey toward responsible genetic algorithms requires sustained commitment extending far beyond initial development. Organizations must embed ethical considerations into their cultures, making responsibility a core value that guides decisions across all levels and persists beyond individual projects or personnel changes.

Leadership commitment proves essential for sustaining responsible practices. When executives prioritize ethics alongside performance and profitability, they signal that responsible innovation represents a strategic imperative rather than a compliance checkbox. This top-down support enables teams to invest time and resources in ethical safeguards without fearing that such investments will be viewed as obstacles to progress.

The transformative potential of genetic algorithms remains immense, offering solutions to pressing challenges in healthcare, environmental sustainability, economic development, and beyond. By embracing responsibility as a fundamental aspect of innovation rather than a constraint upon it, we unlock even greater impact—creating algorithmic systems that not only solve problems efficiently but do so in ways that reflect our highest values and serve the broadest possible good.

The path forward demands vigilance, humility, and continuous learning. As genetic algorithms grow more sophisticated and their applications more pervasive, our ethical frameworks must evolve in parallel. By building responsibility into the foundation of genetic algorithm development, we ensure these powerful tools amplify human potential while respecting human dignity, ultimately creating a future where technological innovation and ethical integrity advance together.

Toni Santos is a consciousness-technology researcher and future-humanity writer exploring how digital awareness, ethical AI systems and collective intelligence reshape the evolution of mind and society. Through his studies on artificial life, neuro-aesthetic computing and moral innovation, Toni examines how emerging technologies can reflect not only intelligence but wisdom. Passionate about digital ethics, cognitive design and human evolution, Toni focuses on how machines and minds co-create meaning, empathy and awareness. His work highlights the convergence of science, art and spirit, guiding readers toward a vision of technology as a conscious partner in evolution. Blending philosophy, neuroscience and technology ethics, Toni writes about the architecture of digital consciousness, helping readers understand how to cultivate a future where intelligence is integrated, creative and compassionate.

His work is a tribute to:

- The awakening of consciousness through intelligent systems

- The moral and aesthetic evolution of artificial life

- The collective intelligence emerging from human-machine synergy

Whether you are a researcher, technologist or visionary thinker, Toni Santos invites you to explore conscious technology and future humanity: one code, one mind, one awakening at a time.